Note: This review aims to provide a high-level summary for an applied audience. The avid reader is encouraged to explore the additional resources below.

2022-02-22

AlphaCode applies an encoder-decoder transformer-based model to the task of generating solutions for competitive programming problems.

Overall, this paper represents clear progress in deep learning for code generation (program synthesis). It is also well written and easy to read, and it provides extensive discussion of tricks to make sampling efficient (GOLD training, tempering), of the model's limitations, and of its applications and societal impact. IMHO, two interesting areas for improvement are training an equally capable model with less information (e.g., without metadata and value conditioning) and, perhaps, more efficient candidate selection.

[1]: Chen, M., Tworek, J., Jun, H., Yuan, Q., Pinto, H.P.D.O., Kaplan, J., Edwards, H., Burda, Y., Joseph, N., Brockman, G. and Ray, A., 2021. Evaluating large language models trained on code. arXiv preprint arXiv:2107.03374.

[2]: Austin, J., Odena, A., Nye, M., Bosma, M., Michalewski, H., Dohan, D., ... and Sutton, C., 2021. Program synthesis with large language models. arXiv preprint arXiv:2108.07732.

[3]: Xu, F.F., Alon, U., Neubig, G. and Hellendoorn, V.J., 2022. A Systematic Evaluation of Large Language Models of Code. arXiv preprint arXiv:2202.13169.

[4]: Pradel, M. and Chandra, S., 2021. Neural software analysis. Communications of the ACM, 65(1), pp.86-96.

[5]: Lu, S., Guo, D., Ren, S., Huang, J., Svyatkovskiy, A., Blanco, A., Clement, C., Drain, D., Jiang, D., Tang, D. and Li, G., 2021. CodeXGLUE: A machine learning benchmark dataset for code understanding and generation. arXiv preprint arXiv:2102.04664.

[6]: Feng, Z., Guo, D., Tang, D., Duan, N., Feng, X., Gong, M., Shou, L., Qin, B., Liu, T., Jiang, D. and Zhou, M., 2020. CodeBERT: A pre-trained model for programming and natural languages. arXiv preprint arXiv:2002.08155.

State of Deep Learning for Code Generation (DL4Code)

State of Deep Learning for Code Generation (DL4Code) CodeXGLUE: A Machine Learning Benchmark Dataset for Code Understanding and Generation | Paper Review

CodeXGLUE: A Machine Learning Benchmark Dataset for Code Understanding and Generation | Paper Review Recent Breakthroughs in AI (Karpathy, Johnson et al, Feb 2021)

Recent Breakthroughs in AI (Karpathy, Johnson et al, Feb 2021) 2023 Year in Review

2023 Year in Review Top 10 Machine Learning and Design Insights from Google IO 2021

Top 10 Machine Learning and Design Insights from Google IO 2021 Neural Extractive Summarization with BERT

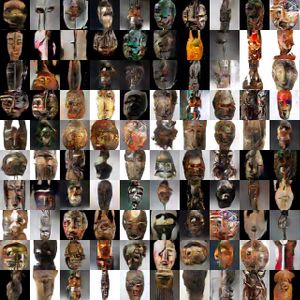

Neural Extractive Summarization with BERT ART + AI — Generating African Masks using (Tensorflow and TPUs)

ART + AI — Generating African Masks using (Tensorflow and TPUs) 10 Predictions on the Future of Cloud Computing by 2025 - Insights from Google Next Conference

10 Predictions on the Future of Cloud Computing by 2025 - Insights from Google Next Conference